EdgeAI-AMB82mini


Course materials: https://github.com/rkuo2000/EdgeAI-AMB82mini

LLM server code examples: [AmebaPro2_server/*.py](https://github.com/rkuo2000/EdgeAI-AMB82mini/blob/main/AmebaPro2_server)

Arduino code examples: [Arduino/AMB82-mini/](https://github.com/rkuo2000/EdgeAI-AMB82mini/blob/main/Arduino/AMB82-mini)

Tze-Chiang Foundation (TCFST) WiFi

SSID: TCFSTWIFI.ALL

Pass: 035623116

1. Introduction to AI

AI overview

AI hardware overview

2. Development Boards

RTL8735B chip overview

32-bit Arm v8M, up to 500MHz, 768KB ROM, 512KB RAM, 16MB Flash (MCM embedded DDR2/DDR3L up to 128MB)

802.11 a/b/g/n WiFi 2.4GHz/5GHz, BLE 5.1, NN Engine 0.4 TOPS, Crypto Engine, Audio Codec, …

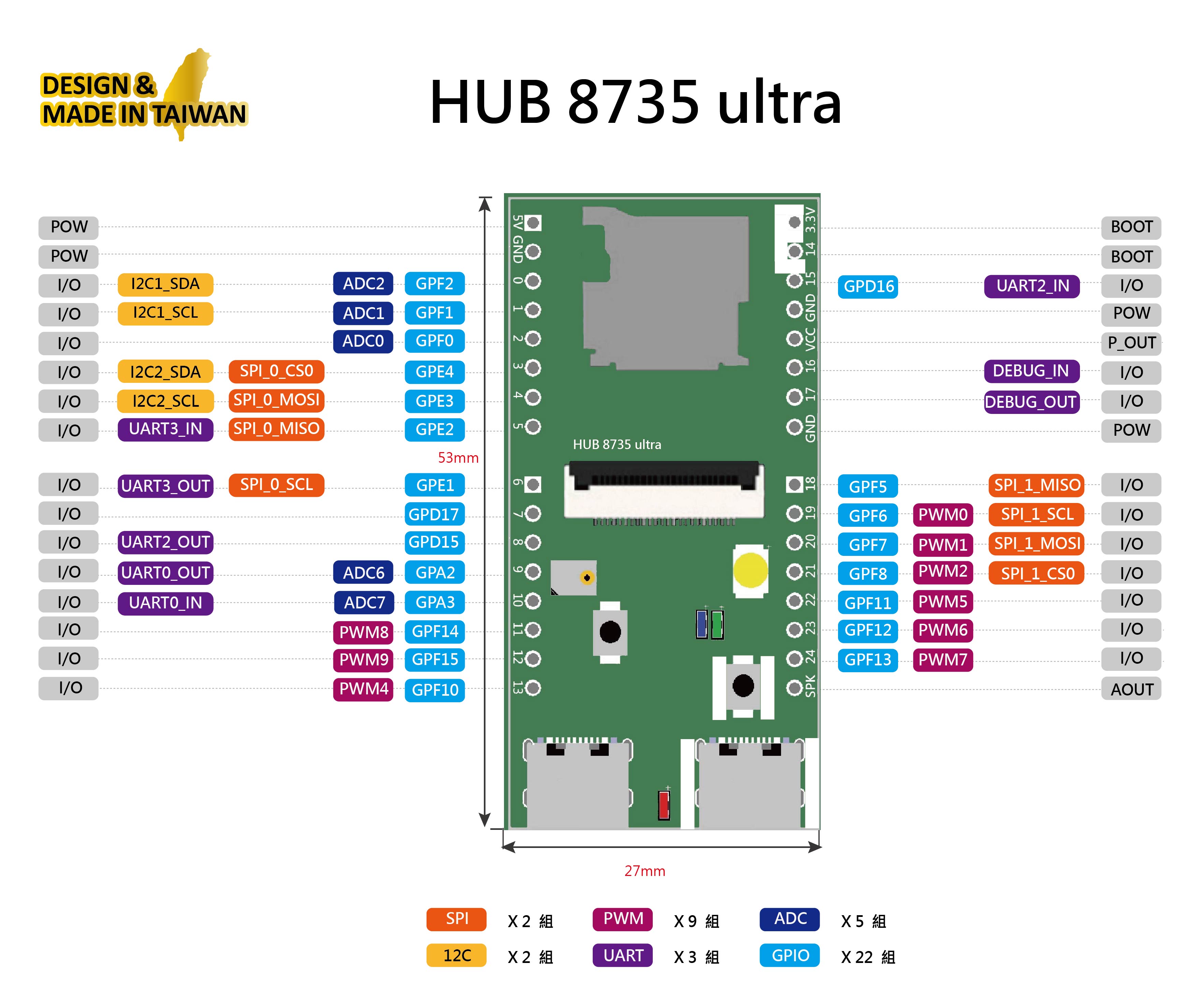

HUB 8735 Ultra

https://github.com/ideashatch/HUB-8735

AMB82-mini

Ameba Arduino

3. Using the IDE

Download and install Arduino IDE 2.3.7

Preferences: Additional boards manager URLs

HUB 8735 Ultra

https://raw.githubusercontent.com/ideashatch/HUB-8735/main/amebapro2_arduino/Arduino_package/ideasHatch.json

AMB82-mini

main https://github.com/Ameba-AIoT/ameba-arduino-pro2/raw/main/Arduino_package/package_realtek_amebapro2_index.json

dev https://github.com/Ameba-AIoT/ameba-arduino-pro2/raw/dev/Arduino_package/package_realtek_amebapro2_early_index.json

Select the AMB82-MINI board

Tools > Board Manager > Search AMB82 : Realtek Ameba Boards 4.1.0-build20260108

Serial-monitor = 115200 baud

Getting Started

First, connect the AMB82-mini board to a USB port on the computer with a Micro USB cable.

Confirm the UART COM port (on Ubuntu, run: sudo chown username /dev/ttyUSB0)
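
On Linux, a USB-UART bridge usually appears as a /dev/ttyUSB* or /dev/ttyACM* device node. The following is a minimal sketch, using only the Python standard library, that lists candidate nodes and reports whether the current user can open them. The function name and the glob patterns are assumptions for illustration and are not part of the course material.

```python
import glob
import os

def list_serial_candidates(dev_dir="/dev", patterns=("ttyUSB*", "ttyACM*")):
    """Return sorted device paths under dev_dir that match the given patterns."""
    found = []
    for pattern in patterns:
        found.extend(glob.glob(os.path.join(dev_dir, pattern)))
    return sorted(found)

for path in list_serial_candidates():
    # os.access reports whether the current user may open the port read/write
    ok = os.access(path, os.R_OK | os.W_OK)
    print(path, "read/write OK" if ok else "no permission")
```

If a port is listed with "no permission", the chown command above (or adding the user to the group that owns the device) is the usual remedy.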

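Uploading code:

- Press and hold the UART_DOWNLOAD button, press the RESET button, release RESET, then release UART_DOWNLOAD. The board is now in download mode.

- Then click the Upload button in the Arduino IDE.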

Upload

Realtek AmebaPro2 hardware libraries

C:\Users\user\AppData\Local\Arduino15\packages\realtek\hardware\AmebaPro2\4.0.9-build20250805\libraries

C:\Users\user\AppData\Local\Arduino15\packages\realtek\hardware\AmebaPro2\4.0.9-build20250805\libraries\NeuralNetwork\src

GenAI.h

GenAI.cpp

Arduino examples: exercises

Examples> 01.Basics > Blink

Examples> 02.Digital > GPIO > Button

Code changes:

const int buttonPin = 1; // the number of the pushbutton pin

const int ledPin = LED_BUILTIN; // the number of the LED pin

Examples> 01.Basics > AnalogReadSerial

Code change: Serial.begin(115200);

AMB82-Mini code examples

- Open EdgeAI-AMB82mini in a browser, click [Code], and select [Download ZIP]

- Unzip the .zip file and copy Arduino/AMB82-mini into Documents/Arduino, so that it becomes Documents/Arduino/AMB82-mini
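
The unzip-and-copy step can also be scripted. This is a minimal sketch under stated assumptions: the downloaded archive name (for example EdgeAI-AMB82mini-main.zip) and the sketchbook location are placeholders, and install_sketches is a hypothetical helper name.

```python
import shutil
import zipfile
from pathlib import Path

def install_sketches(zip_path, sketchbook_dir, subdir="Arduino/AMB82-mini"):
    """Extract zip_path and copy its <subdir> folder into the sketchbook."""
    zip_path = Path(zip_path)
    sketchbook_dir = Path(sketchbook_dir)
    extract_dir = zip_path.with_suffix("")  # extract next to the archive
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(extract_dir)
    # GitHub archives wrap everything in one top-level folder, so search for subdir
    leaf = Path(subdir).name
    matches = [p for p in extract_dir.rglob(leaf)
               if p.is_dir() and p.as_posix().endswith(subdir)]
    if not matches:
        raise FileNotFoundError(f"{subdir} not found in {zip_path}")
    target = sketchbook_dir / leaf
    shutil.copytree(matches[0], target, dirs_exist_ok=True)  # Python 3.8+
    return target
```

A call such as install_sketches("EdgeAI-AMB82mini-main.zip", Path.home() / "Documents" / "Arduino") would then leave the sketches under Documents/Arduino/AMB82-mini.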

4. Examples: exercises

MCU Interfaces

WiFi

Examples> WiFi > SimpleTCPServer

WiFi - Simple TCP Server
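
To exercise a TCP server running on the board from a PC, a small client is enough. The sketch below uses only Python's socket module. The host and port are placeholders to be replaced with the values the board prints on the serial monitor, and whether the board sends anything back depends on the example sketch, so an empty reply is treated as normal.

```python
import socket

def send_line(host, port, message, timeout=5.0):
    """Open a TCP connection, send one line, and return the first reply chunk."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(message.encode("utf-8") + b"\n")
        try:
            return sock.recv(1024).decode("utf-8", errors="replace")
        except socket.timeout:
            return ""  # the server accepted the data but sent nothing back

# Example call (IP address and port are assumptions, not values from the course):
# print(send_line("192.168.1.50", 5000, "hello AMB82-mini"))
```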

Examples> WiFi > SimpleHttpWeb > ReceiveData

WiFi - Simple Http Server to Receive Data

Examples> WiFi > SimpleHttpWeb > ControlLED

WiFi - Simple Http Server to Control LED

Sketchbook> AMB82-mini > WebServer_ControlLED

Sketchbook> WebServer_ControlLED

BLE

Examples> AmebaBLE > BLEV7RC_CAR_VIDEO

MQTT

MQTT is an OASIS standard messaging protocol for the Internet of Things (IoT)

How MQTT Works -Beginners Guide

Examples> AmebaMQTTClient > MQTT_basic

MQTT - Set up MQTT Client to Communicate with Broker

pip install paho-mqtt

publish messages to AMB82-mini

import paho.mqtt.publish as publish

host = "test.mosquitto.org"

publish.single("ntou/edgeai/robot1", "go to the kitchen", hostname=host)

subscribe to messages from AMB82-mini

import paho.mqtt.subscribe as subscribe

host = "test.mosquitto.org"

msg = subscribe.simple("ntou/edgeai/robot1", hostname=host)

print("%s %s" % (msg.topic, msg.payload.decode("utf-8")))
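
Topics such as ntou/edgeai/robot1 are hierarchical, and MQTT subscription filters may use two wildcards: + matches exactly one level, and # (only valid as the last level) matches all remaining levels. The following pure-Python sketch illustrates those matching rules. It is written for illustration only, is not the code the paho library uses internally, and ignores special cases such as topics beginning with $.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic matches a subscription filter."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # multi-level wildcard: valid only as the last level, matches the rest
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)

print(topic_matches("ntou/edgeai/#", "ntou/edgeai/robot1"))
print(topic_matches("ntou/+/robot1", "ntou/edgeai/robot1"))
print(topic_matches("ntou/edgeai/robot2", "ntou/edgeai/robot1"))
```

Subscribing to ntou/edgeai/# would therefore receive messages for every robot under that prefix, while the exact topic used above receives only those for robot1.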

Gemini MQTT App

Google Gemini +Canvas

Prompt: make an html to input MQTT topic and text to publish through Paho-MQTT test.mosquitto.org

5. 感測器與週邊裝置

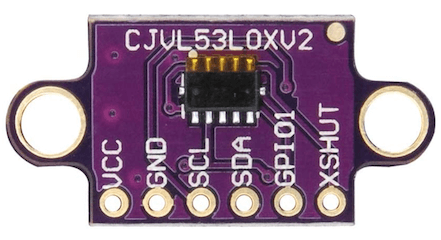

紅外線測距模組

Datasheet: VL53L0X - Time-of-Flight ranging sensor

Sketchbook> AMB82-mini > IR_VL53L0X

慣性感測

Sketchbook > AMB82-mini > MPU6050-DMP6v12

PWM

Examples> AmebaAnalog > PWM_ServoControl

myservo.attach(8);

myservo.write(pos);
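
Hobby servos are commonly driven by a PWM signal of about 50 Hz in which the pulse width encodes the target angle. The sketch below shows that mapping numerically. The 544 to 2400 microsecond range is the default of the standard Arduino Servo library and is only an assumption here; the Ameba implementation and a particular servo may use different limits.

```python
def servo_pulse_us(angle_deg, min_us=544, max_us=2400):
    """Map an angle in [0, 180] degrees to a pulse width in microseconds."""
    angle = max(0, min(180, angle_deg))  # clamp out-of-range angles
    return min_us + (max_us - min_us) * angle / 180

def duty_cycle(pulse_us, freq_hz=50):
    """Fraction of each PWM period during which the signal is high."""
    period_us = 1_000_000 / freq_hz
    return pulse_us / period_us

for pos in (0, 90, 180):
    width = servo_pulse_us(pos)
    print(f"{pos:3d} deg: {width:.0f} us, duty {duty_cycle(width):.3%}")
```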

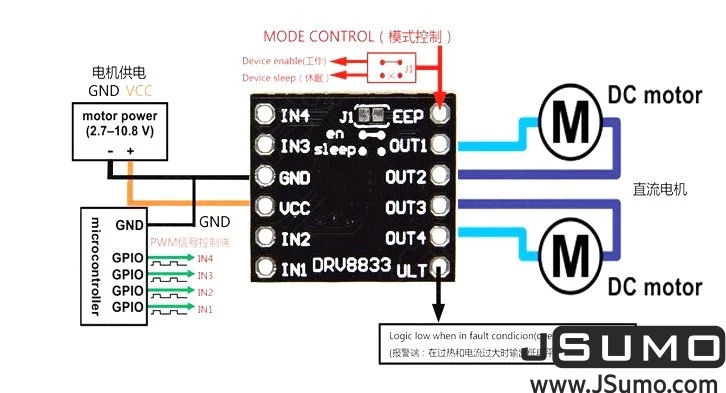

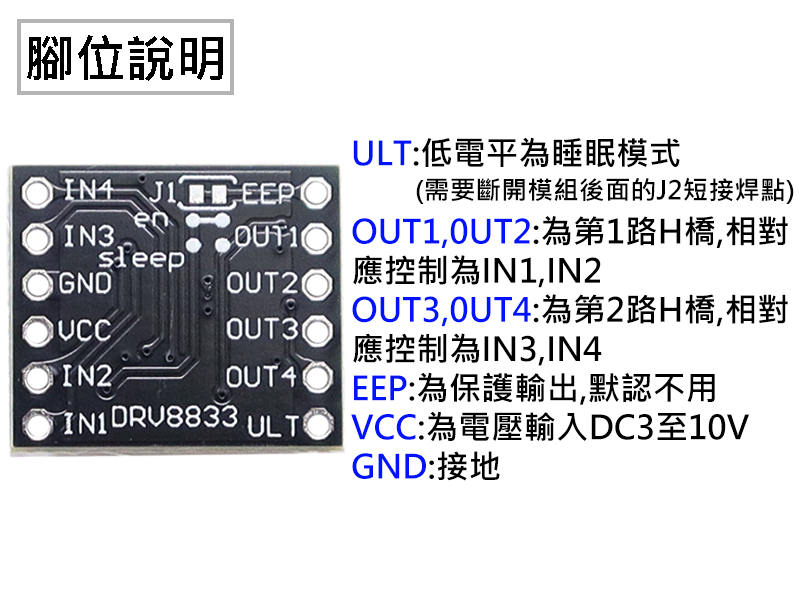

H-bridge (full-bridge) motor driver

TB6612

DRV8833

ILI9341 TFT-LCD

Interface signal names:

- MOSI: Standard SPI Pin

- MISO: Standard SPI Pin

- SCLK: Standard SPI Pin

- CS: Standard SPI Pin

- RESET: Used to reboot LCD.

- D/C: Data/Command. When it is LOW, the transmitted bytes are commands; otherwise they are data.

- LED (or BL): Adjusts the screen backlight. Can be controlled by PWM or connected to VCC for 100% backlight.

- VCC: Connect to 3V or 5V, depending on the module's spec.

- GND: Connected to GND.

AMB82 MINI and QVGA TFT LCD Wiring Diagram:

Sketchbook> AMB82-mini > HTTP_Post_ImageText_TFTLCD

Examples> AmebaSPI > Camera_2_lcd

The camera output is JPEG-decoded and displayed on the TFT-LCD.

If compilation fails, edit Libraries/TJpg_Decoder/src/User_Config.h and comment out this line:

//#define TJPGD_LOAD_SD_LIBRARY

Examples > AmebaSPI > Camera_2_Lcd_JPEGDEC

The camera output is saved to the SD card, then read back by the JPEG decoder and displayed on the TFT-LCD.

Examples > AmebaSPI > LCD_Screen_ILI9341_TFT

LCD Draw Tests

6. Video Streaming Exercises

Video streaming

Examples> AmebaMultimedia > StreamRTSP > VideoOnly

Sketchbook> RTSP_VideoOnly

Motion detection

Examples> AmebaMultimedia > MotionDetection > LoopPostProcessing

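- Modify ssid and passwd, then upload the sketch to the AMB82-mini.

- Press reset to start the program, and check the serial monitor for the stream URL.

- Launch VLC player on a phone or computer and open the RTSP stream URL.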
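
Before opening the URL in VLC, it can help to confirm that the board answers on the RTSP port. The sketch below sends a minimal RTSP OPTIONS request over a plain TCP socket and returns the status line of the reply. Port 554 is the RTSP default; the IP address in the commented example is a placeholder, and the real URL is whatever the serial monitor prints.

```python
import socket
from urllib.parse import urlsplit

def options_request(url, cseq=1):
    """Return the bytes of a minimal RTSP OPTIONS request for url."""
    if urlsplit(url).scheme != "rtsp":
        raise ValueError("expected an rtsp:// URL")
    return (f"OPTIONS {url} RTSP/1.0\r\n"
            f"CSeq: {cseq}\r\n"
            "\r\n").encode("ascii")

def probe(url, timeout=3.0):
    """Send OPTIONS to the RTSP server and return the first line of its reply."""
    parts = urlsplit(url)
    address = (parts.hostname, parts.port or 554)
    with socket.create_connection(address, timeout=timeout) as sock:
        sock.sendall(options_request(url))
        lines = sock.recv(1024).decode("ascii", errors="replace").splitlines()
    return lines[0] if lines else ""

# Example call (placeholder address): print(probe("rtsp://192.168.1.50:554"))
```

A reply beginning with RTSP/1.0 200 suggests the stream is up. A connection error usually means the board is not on the same network or the sketch is not running.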

Motion Detection Google Line Notify

Examples> AmebaMultimedia > MotionDetection > MotionDetectGoogleLineNotify

7. Audio Applications

Audio & Mic

Audio loopback test

Examples> AmebaMultimedia > Audio > LoopbackTest

MP3 playback

AMB82-mini + PAM8403 + 4ohm 3W speaker

Sketchbook> AMB82-mini > SDCardPlayMP

- .mp3 files stored under mp3 directory

Sketchbook> AMB82-mini > SDCardPlayMP_All

Audio streaming example

Examples> AmebaMultimedia > Audio > RTSPAudioStream

MP4 audio recording example

Examples> AmebaMultimedia > RecordMP4 > AudioOnly

Audio classification example

Examples> AmebaNN > AudioClassification

8. Face Detection and Recognition

Introduction to face detection and recognition

Face detection example

Examples> AmebaNN > RTSPFaceDetection

Face recognition example

Examples> AmebaNN > RTSPFaceRecognition

Serial_monitor: REG=RKUO

- Enter the command REG=Name to give the targeted face a name.

- Enter the command DEL=Name to delete a certain registered face. For example, DEL=SAM

- Enter the command BACKUP to save a copy of registered faces to flash.

- If a backup exists, enter the command RESTORE to load registered faces from flash.

- Enter the command RESET to forget all previously registered faces.
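
The serial commands listed above follow a simple grammar: REG and DEL take a name after an equals sign, while BACKUP, RESTORE, and RESET stand alone. A small formatter such as the sketch below can validate a command before it is typed or sent. face_command is a hypothetical helper, and actually transmitting the string (for example with a serial library at 115200 baud) is deliberately left out.

```python
BARE_COMMANDS = {"BACKUP", "RESTORE", "RESET"}

def face_command(action, name=None):
    """Format one serial-monitor command for the face recognition sketch."""
    action = action.upper()
    if action in ("REG", "DEL"):
        if not name:
            raise ValueError(f"{action} requires a name")
        return f"{action}={name}"
    if action in BARE_COMMANDS:
        return action
    raise ValueError(f"unknown command: {action}")

print(face_command("REG", "RKUO"))
print(face_command("DEL", "SAM"))
print(face_command("BACKUP"))
```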

9. Image Classification

Image classification example

RTSP_GarbageClassification.ino

Garbage model training and file conversion

Model training: kaggle.com/rkuo2000/garbage-cnn

Required in Kaggle for AmebaPro2: 1) pip install tensorflow==2.14.1 2) model.save('garbage_cnn.h5', include_optimizer=False)

Model conversion: AMB82 AI Model Conversion

- Download garbage_cnn.h5 from the Output of kaggle.com/rkuo2000/garbage-cnn

- Compress garbage_cnn.h5 to garbage_cnn.zip

- Go to Amebapro2 AI convert model and fill in your e-mail address

- Upload garbage_cnn.zip

- Upload one (.jpg) test picture (e.g. glass100.jpg from the Garbage dataset)

- An e-mail will be sent to you with a link to network_binary.nb
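
The compression step can be done with any zip tool. As a sketch, Python's zipfile module produces an archive with the model file at its root. Placing the file at the archive root is an assumption about what the conversion page expects, and zip_model is a hypothetical helper name.

```python
import zipfile
from pathlib import Path

def zip_model(model_path):
    """Compress a single model file into <stem>.zip next to it and return the path."""
    model_path = Path(model_path)
    zip_path = model_path.with_suffix(".zip")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname stores the file at the archive root, without parent folders
        zf.write(model_path, arcname=model_path.name)
    return zip_path

# Example call: zip_model("garbage_cnn.h5") writes garbage_cnn.zip
```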

Code example: RTSP_GarbageClassification.ino

- Click the link in the received e-mail to download network_binary.nb

- Create an NN_MDL folder on the SD card, save network_binary.nb under the NN_MDL folder, and rename it to imgclassification.nb

- Plug the SD card back into the AMB82-MINI

- Modify the sketch RTSP_GarbageClassification.ino: 1) modify SSID and PASSWD 2) modify imgclass.modelSelect (change DEFAULT_IMGCLASS to CUSTOMIZED_IMGCLASS)

- Upload the code to the AMB82-MINI board and run it, viewing the stream in VLC player
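
Copying the converted model onto the SD card under the expected name can be scripted as well. In this sketch, the SD card mount point is a placeholder and install_model is a hypothetical helper; the NN_MDL folder and the imgclassification.nb file name come from the steps above.

```python
import shutil
from pathlib import Path

def install_model(nb_file, sd_root, target_name="imgclassification.nb"):
    """Copy a converted .nb model to <sd_root>/NN_MDL/<target_name>."""
    model_dir = Path(sd_root) / "NN_MDL"
    model_dir.mkdir(parents=True, exist_ok=True)
    # copyfile returns the destination path it wrote to
    return Path(shutil.copyfile(nb_file, model_dir / target_name))

# Example call (mount point is an assumption):
# install_model("network_binary.nb", "/media/user/SDCARD")
```

Passing a different target_name lets the same helper serve other examples that load a model from the SD card under another file name.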

10. Object Detection

Public Dataset

Introduction to object detection

Kaggle examples:

- YOLOv7 Facemask detection

- YOLOv7 Pothole detection

- YOLOv7 Sushi detection

- YOLOv7 refrigeratoryfood

- YOLOv7 reparm

Pothole model training and file conversion

Model training: kaggle.com/rkuo2000/yolov7-pothole

1) repo: https://github.com/WongKinYiu/yolov7

2) create pothole.yaml

%%writefile data/pothole.yaml

train: ./Datasets/pothole/train/images

val: ./Datasets/pothole/valid/images

test: ./Datasets/pothole/test/images

# Classes

nc: 1 # number of classes

names: ['pothole'] # class names

3) YOLOv7-Tiny Fixed Resolution Training

!sed -i "s/nc: 80/nc: 1/" cfg/training/yolov7-tiny.yaml

!sed -i "s/IDetect/Detect/" cfg/training/yolov7-tiny.yaml
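
Outside a notebook, or on a system without sed, the same two edits to the model configuration can be made in Python. The sketch below mirrors the behaviour of sed's s/old/new/ without the g flag, which replaces only the first match on each line. patch_cfg is a hypothetical helper name.

```python
from pathlib import Path

def patch_cfg(cfg_path, replacements=(("nc: 80", "nc: 1"), ("IDetect", "Detect"))):
    """Apply each (old, new) pair to the first match on every line of cfg_path."""
    path = Path(cfg_path)
    lines = path.read_text().splitlines(keepends=True)
    for old, new in replacements:
        # count=1 mirrors sed's s/old/new/ (no g flag): first match per line only
        lines = [line.replace(old, new, 1) for line in lines]
    path.write_text("".join(lines))

# Example call: patch_cfg("cfg/training/yolov7-tiny.yaml")
```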

Model conversion: AMB82 AI Model Conversion

- Download best.pt from kaggle.com/rkuo2000/yolov7-pothole

- Compress best.pt to best.zip

- Go to Amebapro2 AI convert model and fill in your e-mail address

- Upload best.zip

- Upload one (.jpg) test picture (e.g. pothole_test.jpg from the Pothole dataset)

- An e-mail will be sent to you with a link to network_binary.nb

Code example: RTSP_YOLOv7_Pothole_Detection.ino

- Click the link in the received e-mail to download network_binary.nb

- Create an NN_MDL folder on the SD card, save network_binary.nb under the NN_MDL folder, and rename it to yolov7_tiny.nb

- Plug the SD card back into the AMB82-MINI

- Modify the sketch RTSP_YOLOv7_Pothole_Detection.ino: 1) modify SSID and PASSWD 2) change the model selection to ObjDet.modelSelect(OBJECT_DETECTION, CUSTOMIZED_YOLOV7TINY, NA_MODEL, NA_MODEL);

- Upload the code to the AMB82-MINI board and run it, viewing the stream in VLC player

AMB82 Mini - object detection examples

RTSP_YOLOv7_Pothole

RTSP_YOLOv7_Sushi

AMB82 Mini - loading models from the SD card

RTPS_ObjectDetection_AudioClassification.ino

AMB82 Mini - online AI model conversion tool

11. Large Language Model (LLM) Examples

Speech recognition example

ffmpeg.exe is needed for Windows to run Whisper!

Voice chat example

ffmpeg.exe is needed for Windows to run Whisper!

Download ffmpeg-master-latest-win64-gpl.zip, extract it, and place ffmpeg.exe in the directory where you run the Whisper server.
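
Whether ffmpeg can be found is easy to check before starting the server. This sketch looks in the server directory first and then on PATH, using only the standard library. find_ffmpeg is a hypothetical helper, and the assumption that a copy next to the server script is enough follows from the instruction above.

```python
import shutil
from pathlib import Path

def find_ffmpeg(server_dir="."):
    """Return the path to ffmpeg if it is in server_dir or on PATH, else None."""
    for name in ("ffmpeg.exe", "ffmpeg"):
        local = Path(server_dir) / name
        if local.is_file():
            return str(local)
    # fall back to the system search path
    return shutil.which("ffmpeg")

location = find_ffmpeg()
print(location if location else "ffmpeg not found; Whisper will fail to decode audio")
```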

AmebaPro2 Whisper LLM_server

AmebaPro2_Whisper_Gemini_server

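- Get an API key from https://aistudio.google.com/app/apikey, fill it in as GOOGLE_API_KEY, then run AmebaPro2_Whisper_Gemini_server.py

HTTP_Post_Audio.ino

- Modify the server IP address in RecordMP4_HTTP_Post_Audio.ino, then upload it to the AMB82-MINI

- Reset the AMB82-MINI to start it. After holding the button for two seconds, you can record a spoken question for the LLM/Gemini

12. Vision Language Model (VLM)

Image + voice chat example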

13. GenAI

Examples: AmebaNN > MultimediaAI > GenAIVision

Examples: AmebaNN > MultimediaAI > GenAISpeech_Gemini

Examples: AmebaNN > MultimediaAI > TextToSpeech