Visual-Inertial Navigation System

Introduction to VIO, OpenVINS, VINS-Mono, VINS-Fusion, cm-level GPS positioning, and datasets (EuRoC-MAV, TUM Visual-Inertial Dataset, UZH-FPV Drone Racing Dataset, KAIST Urban Dataset, KAIST VIO Dataset, KITTI Vision Benchmark Suite, Visual Odometry / SLAM Evaluation).

Visual-Inertial odometry (VIO)

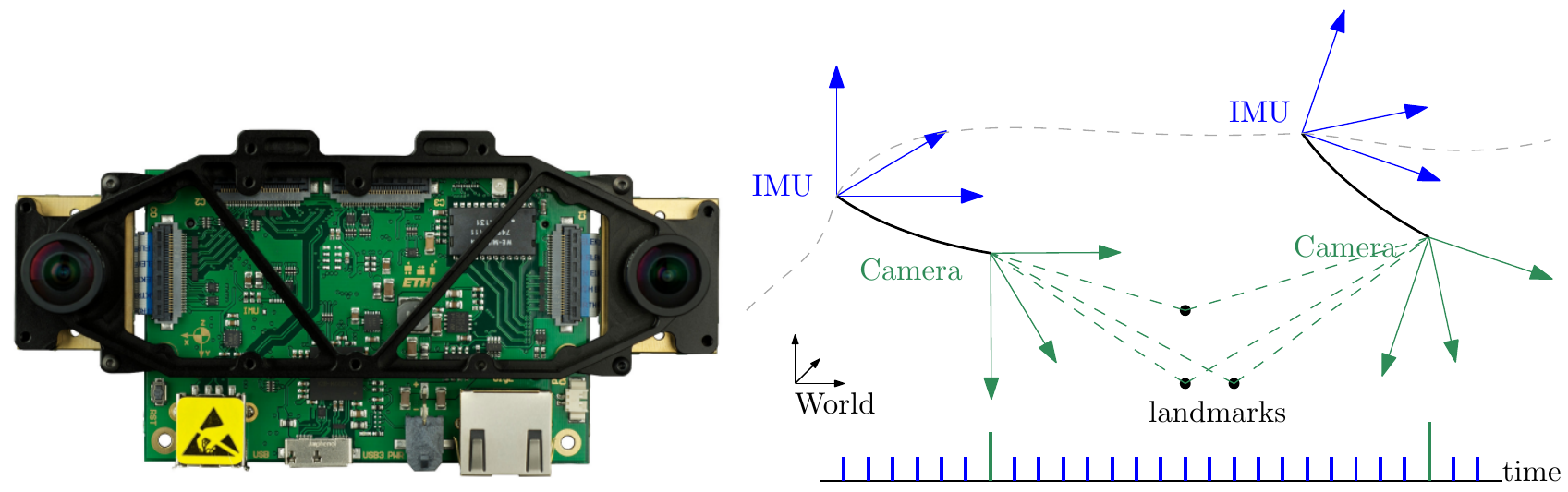

Visual-Inertial odometry (VIO) is the process of estimating the state (pose and velocity) of an agent (e.g., an aerial robot) using only the input from one or more cameras and one or more Inertial Measurement Units (IMUs) attached to it.

Visual-Inertial Odometry of Aerial Robots

Fisher Information Field for Active Visual Localization

A Tutorial on Quantitative Trajectory Evaluation for Visual(-Inertial) Odometry

On the Comparison of Gauge Freedom Handling in Optimization-based Visual-Inertial State Estimation

Active Exposure Control for Robust Visual Odometry in High Dynamic Range (HDR) Environments

SVO 2.0 Semi-Direct Visual Odometry

SVO 2.0: Semi-Direct Visual Odometry for Monocular and Multi-Camera Systems

1-point RANSAC

Given a car equipped with an omnidirectional camera, the motion of the vehicle can be recovered purely from salient features tracked over time. We propose the 1-Point RANSAC algorithm, which runs at 800 Hz on a normal laptop.
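The reason one point suffices is that a wheeled vehicle obeys a nonholonomic constraint: under a planar circular-motion model the relative motion has a single unknown angle, so one correspondence fixes a hypothesis. The sketch below assumes the camera moves in the x-y plane with z pointing up, that the translation direction is at half the yaw angle, and the frame convention stated in the docstring; under these assumptions the epipolar constraint gives θ = -2·atan((y2 z1 - z2 y1)/(x2 z1 + z2 x1)). All function names, the synthetic data generator, the threshold, and the median refinement are illustrative choices, not the paper's implementation.

```python
import math
import random


def _unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def make_synthetic_matches(theta=0.1, rho=1.0, n=50, outlier_every=3, seed=1):
    """Hypothetical test data: bearing pairs (p1, p2) for planar circular motion.

    Assumed convention: z axis up, X2 = Rz(theta)^T (X1 - t),
    t = rho * (cos(theta/2), sin(theta/2), 0).
    """
    rng = random.Random(seed)
    c, s = math.cos(theta), math.sin(theta)
    t = (rho * math.cos(theta / 2), rho * math.sin(theta / 2), 0.0)
    matches = []
    for i in range(n):
        X = (rng.uniform(3, 10), rng.uniform(-5, 5), rng.uniform(1, 3))
        d = [X[k] - t[k] for k in range(3)]
        p2 = _unit((c * d[0] + s * d[1], -s * d[0] + c * d[1], d[2]))
        if i % outlier_every == 0:  # corrupt some matches so they act as outliers
            p2 = _unit((rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(0.2, 1)))
        matches.append((_unit(X), p2))
    return matches


def yaw_from_one_point(p1, p2):
    """Yaw angle implied by a single bearing correspondence (None if degenerate)."""
    x1, y1, z1 = p1
    x2, y2, z2 = p2
    den = x2 * z1 + z2 * x1
    if abs(den) < 1e-12:
        return None
    return -2.0 * math.atan((y2 * z1 - z2 * y1) / den)


def epipolar_residual(theta, p1, p2):
    """|p2^T E(theta) p1| for the circular-motion essential matrix (up to scale)."""
    x1, y1, z1 = p1
    x2, y2, z2 = p2
    phi = -theta / 2.0
    return abs(math.sin(phi) * (x2 * z1 + z2 * x1) + math.cos(phi) * (z2 * y1 - y2 * z1))


def one_point_ransac(matches, iterations=20, threshold=1e-6, seed=0):
    """1-point RANSAC: every hypothesis is generated from one correspondence."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        theta = yaw_from_one_point(*rng.choice(matches))
        if theta is None:
            continue
        inliers = [m for m in matches if epipolar_residual(theta, *m) < threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Simple refinement (an assumption here): median of per-point yaw over inliers.
    thetas = sorted(t for t in (yaw_from_one_point(*m) for m in best_inliers) if t is not None)
    if not thetas:
        return None, 0
    return thetas[len(thetas) // 2], len(best_inliers)
```

For example, `one_point_ransac(make_synthetic_matches(theta=0.1))` should recover a yaw close to 0.1 rad while rejecting the corrupted matches. Because each hypothesis needs only one sample, a handful of iterations is enough even with a large outlier ratio, which is what makes such high frame rates possible.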

Visual-Inertial Odometry Benchmarking

ROVIO

Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback

OKVIS

Paper: OKVIS: Keyframe-Based Visual-Inertial SLAM using Nonlinear Optimization

Github: https://github.com/ethz-asl/okvis_ros

OpenVINS

Paper: OpenVINS: A Research Platform for Visual-Inertial Estimation

The core filter is an extended Kalman filter (EKF) that fuses inertial information with sparse visual feature tracks. These feature tracks are fused using the Multi-State Constraint Kalman Filter (MSCKF) sliding-window formulation, which allows 3D features to update the state estimate without directly estimating the feature states in the filter.
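For reference, the standard MSCKF update (Mourikis and Roumeliotis, 2007) removes the feature from the linearized measurement model by projecting the stacked residual of feature j onto the left null space of its feature Jacobian. The notation below is a generic sketch, not OpenVINS's internal naming:

```latex
\begin{aligned}
\mathbf{r}_j &\approx \mathbf{H}_{x,j}\,\tilde{\mathbf{x}} + \mathbf{H}_{f,j}\,{}^{G}\tilde{\mathbf{p}}_{f_j} + \mathbf{n}_j, \\
\mathbf{A}_j^{\top}\mathbf{H}_{f,j} &= \mathbf{0} \quad (\text{columns of } \mathbf{A}_j \text{ span the left null space of } \mathbf{H}_{f,j}), \\
\mathbf{r}_{o,j} = \mathbf{A}_j^{\top}\mathbf{r}_j &\approx \mathbf{A}_j^{\top}\mathbf{H}_{x,j}\,\tilde{\mathbf{x}} + \mathbf{A}_j^{\top}\mathbf{n}_j = \mathbf{H}_{o,j}\,\tilde{\mathbf{x}} + \mathbf{n}_{o,j}.
\end{aligned}
```

If the feature is observed from M cloned poses, r_j has 2M rows and H_{f,j} (assumed full column rank) has 3 columns, so the projected residual has 2M - 3 rows and depends only on the state error; this is why the 3D feature never has to be added to the filter state.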

VINS-Mobile

VINS-Mono

VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator

vins_estimator

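- factor:
  - imu_factor.h: IMU residual and Jacobians
  - integration_base.h: IMU pre-integration
  - marginalization.cpp/.h: marginalization
  - pose_local_parameterization.cpp/.h: local parameterization
  - projection_factor.cpp/.h: visual residual
- initial:
  - initial_alignment.cpp/.h: visual-IMU alignment (gyroscope bias, scale, gravity, and velocity)
  - initial_ex_rotation.cpp/.h: camera-IMU extrinsic calibration
  - initial_sfm.cpp/.h: vision-only SfM, triangulation, PnP
  - solve_5pts.cpp/.h: five-point method to solve for the essential matrix and recover R, t
- utility:
  - CameraPoseVisualization.cpp/.h: camera pose visualization
  - tic_toc.h: timing
  - utility.cpp/.h: various quaternion and matrix conversions
  - visualization.cpp/.h: publishing of various data
- estimator.cpp/.h: implementation of the tightly coupled VIO state estimator
- estimator_node.cpp: ROS node function and callbacks
- feature_manager.cpp/.h: feature point management, triangulation, keyframes, etc.
- parameters.cpp/.h: reads parameters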
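To make the role of `integration_base.h` more concrete, here is a minimal Python sketch of midpoint IMU pre-integration between two keyframes (VINS-Mono's `integration_base.h` also uses a midpoint scheme). It assumes bias-corrected accelerometer and gyroscope samples at a fixed period, ignores noise, covariance, and bias Jacobians, and uses hypothetical helper names. Quaternions are stored as (w, x, y, z); alpha, beta, and gamma follow the VINS-Mono paper's names for the position, velocity, and rotation increments.

```python
import math


def quat_mul(a, b):
    """Hamilton product of quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_rotate(q, v):
    """Rotate a 3-vector v by a unit quaternion q: q * (0, v) * q^-1."""
    w, x, y, z = q
    r = quat_mul(quat_mul(q, (0.0, *v)), (w, -x, -y, -z))
    return r[1:]


def quat_from_rotvec(phi):
    """Exact unit quaternion for a rotation vector phi (axis * angle)."""
    angle = math.sqrt(sum(c * c for c in phi))
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), phi[0] * s, phi[1] * s, phi[2] * s)


def preintegrate_midpoint(samples, dt):
    """Midpoint pre-integration of (acc, gyr) samples taken every dt seconds.

    Returns (alpha, beta, gamma) expressed in the first body frame, without
    gravity (gravity is handled later in the IMU residual).
    """
    alpha, beta, gamma = (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)
    for (acc0, gyr0), (acc1, gyr1) in zip(samples[:-1], samples[1:]):
        # Average angular rate over the interval, then update the rotation.
        omega = [(g0 + g1) / 2.0 for g0, g1 in zip(gyr0, gyr1)]
        gamma_next = quat_mul(gamma, quat_from_rotvec([w * dt for w in omega]))
        # Average of the accelerations rotated by the start and end orientations.
        a_mid = [(u + v) / 2.0 for u, v in
                 zip(quat_rotate(gamma, acc0), quat_rotate(gamma_next, acc1))]
        alpha = tuple(p + vel * dt + 0.5 * a * dt * dt for p, vel, a in zip(alpha, beta, a_mid))
        beta = tuple(vel + a * dt for vel, a in zip(beta, a_mid))
        gamma = gamma_next
    return alpha, beta, gamma
```

With a constant body acceleration of 1 m/s² along x and no rotation, ten 0.1 s intervals give alpha ≈ (0.5, 0, 0) and beta ≈ (1, 0, 0). Because these increments depend only on the IMU samples (and the biases), they need not be re-integrated when the keyframe poses change during optimization.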

VINS-Fusion

A General Optimization-based Framework for Local Odometry Estimation with Multiple Sensors

VI-Car

EuRoC-MAV

KITTI

- KITTI Odometry (Stereo)

- Download KITTI Odometry dataset to YOUR_DATASET_FOLDER.

roslaunch vins vins_rviz.launch

(optional) rosrun loop_fusion loop_fusion_node ~/catkin_ws/src/VINS-Fusion/config/kitti_odom/kitti_config00-02.yaml

rosrun vins kitti_odom_test ~/catkin_ws/src/VINS-Fusion/config/kitti_odom/kitti_config00-02.yaml YOUR_DATASET_FOLDER/sequences/00/

- KITTI GPS Fusion (Stereo + GPS)

- Download KITTI raw dataset to YOUR_DATASET_FOLDER. Take 2011_10_03_drive_0027_synced for example.

roslaunch vins vins_rviz.launch

rosrun vins kitti_gps_test ~/catkin_ws/src/VINS-Fusion/config/kitti_raw/kitti_10_03_config.yaml YOUR_DATASET_FOLDER/2011_10_03_drive_0027_sync/

rosrun global_fusion global_fusion_node

- Recalibrating the KITTI Dataset Camera Setup for Improved Odometry Accuracy

VINS-Fusion Code Walkthrough

Improved VINS-Mono

PL-VIO

PL-VIO: Tightly-Coupled Monocular Visual–Inertial Odometry Using Point and Line Features

Code: HeYijia/PL-VIO

PL-VINS

PL-VINS: Real-Time Monocular Visual-Inertial SLAM with Point and Line Features

The Blue rectangles represent the differences from VINS-Mono

Code

The build took ~37 hours on i7-920 @2.67GHz !!!

MH_01_easy

MH_05_difficult

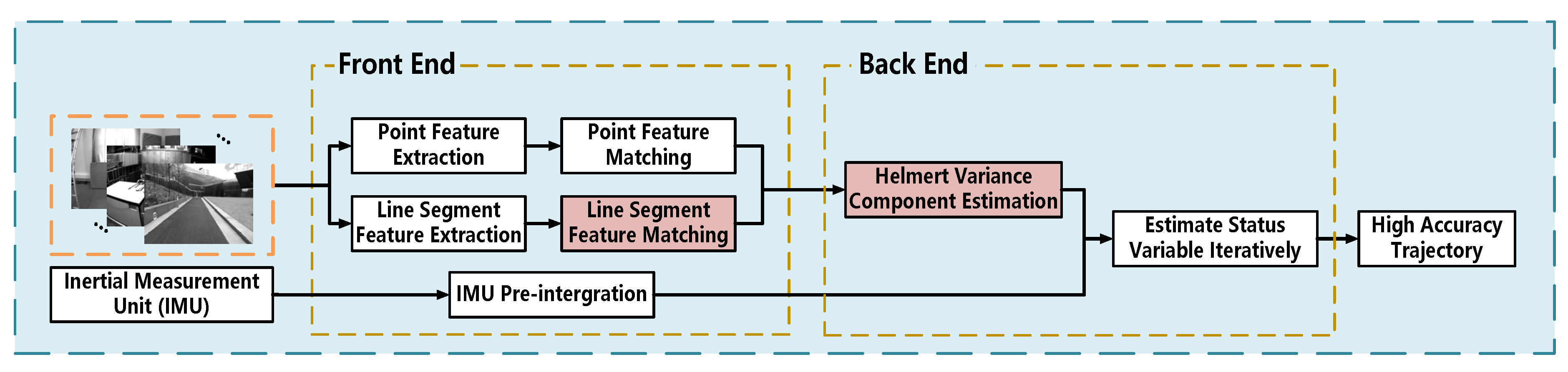

IPL-VIO

Improved Point–Line Visual–Inertial Odometry System Using Helmert Variance Component Estimation

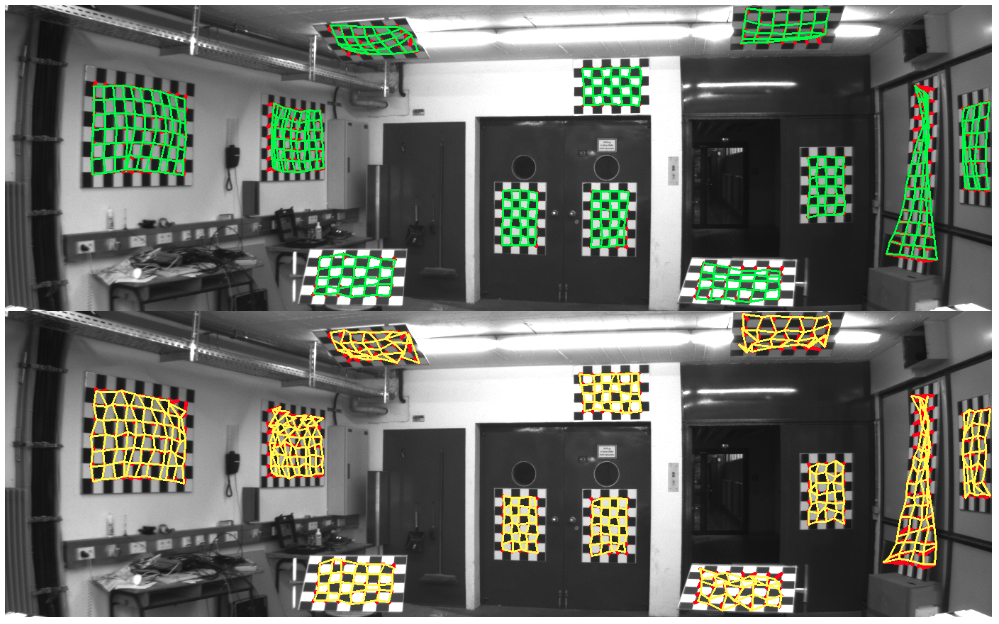

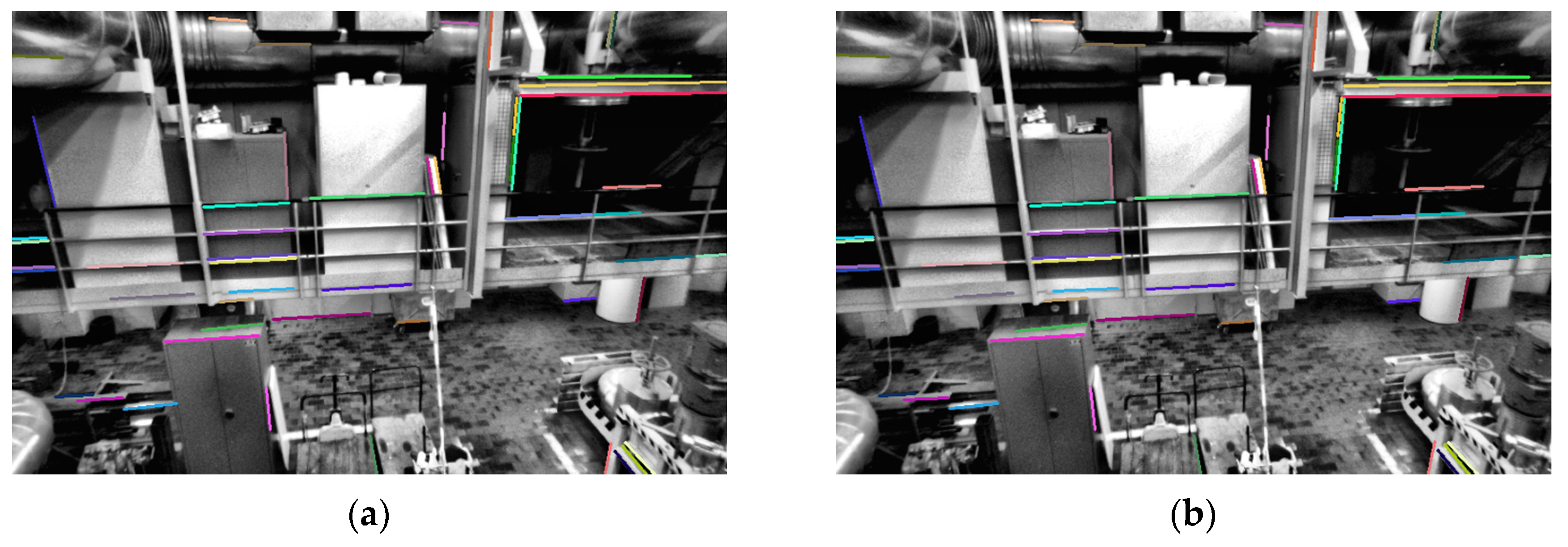

Figure 6. Comparison of the matching results of the line binary descriptor (LBD) matching method and the improved matching method on the MH_02_easy dataset.

Images (a, b) show LBD descriptor matching; images (c, d) show the improved matching method. In each image, lines of the same color are corresponding matched lines.

PLS-VIO

Leveraging Structural Information to Improve Point Line Visual-Inertial Odometry

Code

RK-VIF

An improved SLAM based on RK-VIF: Vision and inertial information fusion via Runge-Kutta method

Visual-inertial information fusion framework

The process of the PROSAC algorithm

PROSAC (Progressive Sample Consensus) is based on the assumption that correspondences with higher quality scores are more likely to be inliers.
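A simplified PROSAC-style sampler can be sketched on a toy 2D line-fitting problem. Correspondences are sorted by a quality score, and each minimal sample is drawn from the top-n points, where n grows as iterations proceed, so the earliest hypotheses come from the most trusted points. The linear growth schedule, the line model, and all names are illustrative assumptions; the original PROSAC (Chum and Matas, 2005) uses a specific growth function and stopping criterion that are not reproduced here.

```python
import random


def fit_line(p, q):
    """Line y = a*x + b through two points (None if the line is vertical)."""
    (x1, y1), (x2, y2) = p, q
    if x1 == x2:
        return None
    a = (y2 - y1) / (x2 - x1)
    return a, y1 - a * x1


def prosac_line(points, scores, iterations=50, threshold=0.05, seed=0):
    """Simplified PROSAC: draw minimal samples from a growing top-n subset."""
    rng = random.Random(seed)
    # Sort by descending quality so the best-scored points come first.
    order = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)
    ranked = [points[i] for i in order]
    n_min, n_max = 2, len(ranked)
    best_model, best_inliers = None, -1
    for k in range(iterations):
        # Grow the sampling pool linearly from the top-2 points to all points.
        n = min(n_max, n_min + (n_max - n_min) * k // max(1, iterations - 1))
        p, q = rng.sample(ranked[:n], 2)
        model = fit_line(p, q)
        if model is None:
            continue
        a, b = model
        inliers = sum(1 for x, y in points if abs(y - (a * x + b)) < threshold)
        if inliers > best_inliers:
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

When the quality scores are informative, the very first samples are already all-inlier, so a good model is usually found far earlier than with uniform RANSAC sampling; as n grows, the method degrades gracefully toward ordinary RANSAC if the scores turn out to be unreliable.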

This paper uses the Runge-Kutta method to discretize the IMU motion equations in order to improve the pre-integration accuracy. The basic idea of the Runge-Kutta method is to evaluate the derivative at several points within the integration interval, take a weighted average of these derivatives, and then use this average derivative to estimate the state at the end of the interval. The formula of the fourth-order Runge-Kutta algorithm is as follows:
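For reference, the classic fourth-order Runge-Kutta step for y' = f(t, y) with step h is y_{n+1} = y_n + (h/6)(k1 + 2k2 + 2k3 + k4), where k1 = f(t_n, y_n), k2 = f(t_n + h/2, y_n + h·k1/2), k3 = f(t_n + h/2, y_n + h·k2/2), and k4 = f(t_n + h, y_n + h·k3). The sketch below applies it to the translational part of IMU propagation over one sampling interval. It assumes the acceleration is already expressed in the world frame with gravity removed and varies linearly between two consecutive IMU samples; rotation is not integrated, the function names are hypothetical, and this is not the RK-VIF paper's exact formulation.

```python
def rk4_step(f, t, y, h):
    """One classic RK4 step for y' = f(t, y), with y a list of floats."""
    def add(a, b, s):
        return [ai + s * bi for ai, bi in zip(a, b)]

    k1 = f(t, y)
    k2 = f(t + h / 2, add(y, k1, h / 2))
    k3 = f(t + h / 2, add(y, k2, h / 2))
    k4 = f(t + h, add(y, k3, h))
    return [yi + h / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def propagate_translation(p, v, acc0, acc1, dt):
    """Integrate p' = v, v' = a(t) over [0, dt], a(t) linear between acc0 and acc1.

    State layout: [px, py, pz, vx, vy, vz].
    """
    def acc_at(t):
        return [a0 + (a1 - a0) * t / dt for a0, a1 in zip(acc0, acc1)]

    def f(t, y):
        return y[3:] + acc_at(t)  # derivative = (velocity, acceleration)

    y = rk4_step(f, 0.0, list(p) + list(v), dt)
    return y[:3], y[3:]
```

Under the linear-acceleration assumption the true position is a cubic in time, and RK4 reproduces it exactly up to rounding, whereas a first-order Euler step would not; this is the kind of discretization error the paper aims to reduce.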

Sensor frequency

VINS state and constraints

Sliding window optimization range

Loop detection process

After the system detects a loop, it can establish a constraint between the two frames through feature matching. First, the map points matched by the loop are fused and redundant map points are eliminated. Then the relative pose between the two keyframes is computed with the Perspective-n-Point (PnP) algorithm, and the current keyframe's pose is corrected. In addition, the other keyframes in the loop carry different amounts of accumulated error and need to be globally optimized.
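The PnP step can be written as a reprojection-error minimization. A generic formulation, assuming a calibrated pinhole camera with intrinsics K, matched 3D map points P_i, 2D observations u_i in the current keyframe, a robust kernel ρ, and π(x) = (x_1/x_3, x_2/x_3):

```latex
(\mathbf{R}^{*}, \mathbf{t}^{*}) = \arg\min_{\mathbf{R} \in SO(3),\; \mathbf{t} \in \mathbb{R}^{3}}
\sum_{i=1}^{N} \rho\!\left( \left\| \mathbf{u}_i - \pi\!\left( \mathbf{K}\,(\mathbf{R}\,\mathbf{P}_i + \mathbf{t}) \right) \right\|^{2} \right)
```

The minimal case needs three points (P3P), so in practice a minimal solver is typically run inside RANSAC to reject wrong loop matches before this refinement; the resulting pose corrects the current keyframe, and the remaining drift is then spread over the loop by the global optimization.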

Globally optimized pose

Dataset experiment

RMSE on the EuRoC dataset (unit: m)
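As a reminder of what this metric measures, the sketch below computes the position RMSE (absolute trajectory error) between estimated and ground-truth positions. It assumes the two trajectories have already been associated by timestamp and aligned (for VIO, usually with a 4-DoF yaw-plus-translation or similarity alignment, as covered by the trajectory-evaluation tutorial listed earlier); the function name is hypothetical.

```python
import math


def position_rmse(estimated, ground_truth):
    """RMSE of Euclidean position errors over matched (x, y, z) samples, in meters."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [sum((e - g) ** 2 for e, g in zip(pe, pg))
               for pe, pg in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))
```

Because the errors are squared before averaging, a few large drift segments dominate the RMSE, so the same number can hide very different error profiles; that is why per-sequence values (e.g. MH_01_easy vs. MH_05_difficult) are usually reported separately.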

GPS-VIO

The Integration of GPS-BDS Real-Time Kinematic Positioning and Visual–Inertial Odometry Based on Smartphones

Paper: https://www.mdpi.com/2220-9964/10/10/699/pdf

Tightly-coupled Fusion of Global Positional Measurements in Optimization-based Visual-Inertial Odometry

Paper: https://arxiv.org/abs/2003.04159

GVINS

Paper: GVINS: Tightly Coupled GNSS-Visual-Inertial Fusion for Smooth and Consistent State Estimation

Tightly Coupled Optimization-based GPS-Visual-Inertial Odometry with Online Calibration and Initialization

Paper: https://arxiv.org/abs/2203.02677

EMA-VIO: Deep Visual-Inertial Odometry with External Memory Attention

Paper: https://arxiv.org/abs/2209.08490

Benchmarking VI Deep Multimodal Fusion

Paper: https://arxiv.org/abs/2208.00919

EMV-LIO: An Efficient Multiple Vision aided LiDAR-Inertial Odometry

Paper: https://arxiv.org/abs/2302.00216

Datasets

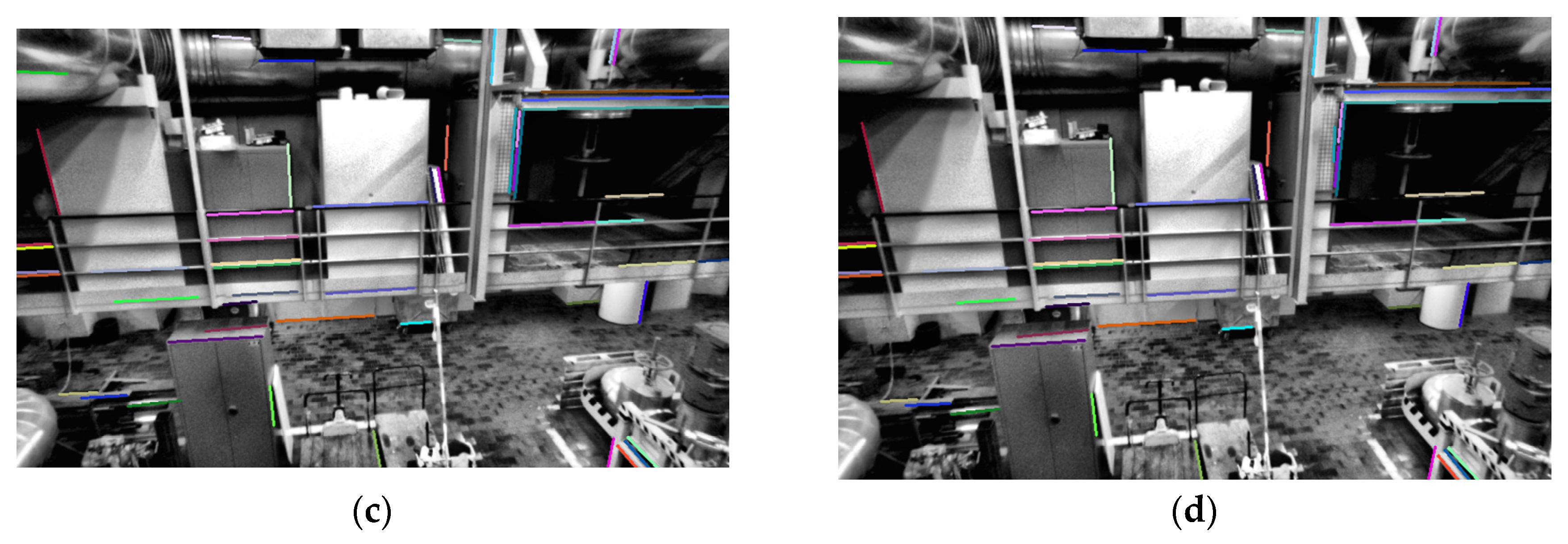

EuRoC MAV Dataset

The ETH ASL EuRoC MAV dataset is one of the most used datasets in the visual-inertial / simultaneous localization and mapping (SLAM) research literature.

Platform and Sensors

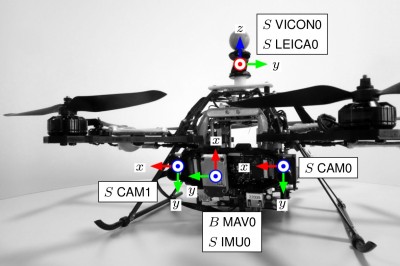

KITTI Vision Benchmark Suite

This site was last updated October 07, 2024.