Agents

Introduction to AI Agents, LangChain, and SWE.

Agents

LLM Agent Paper List

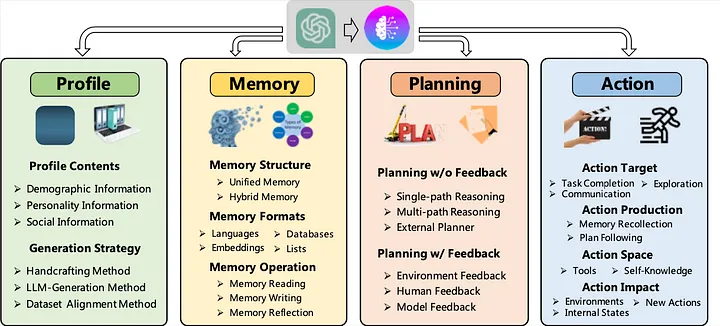

Paper: The Rise and Potential of Large Language Model Based Agents: A Survey

Survey of LLM-based AI Agents

Paper: An In-depth Survey of Large Language Model-based Artificial Intelligence Agents

Blog: 4 Autonomous AI Agents you need to know

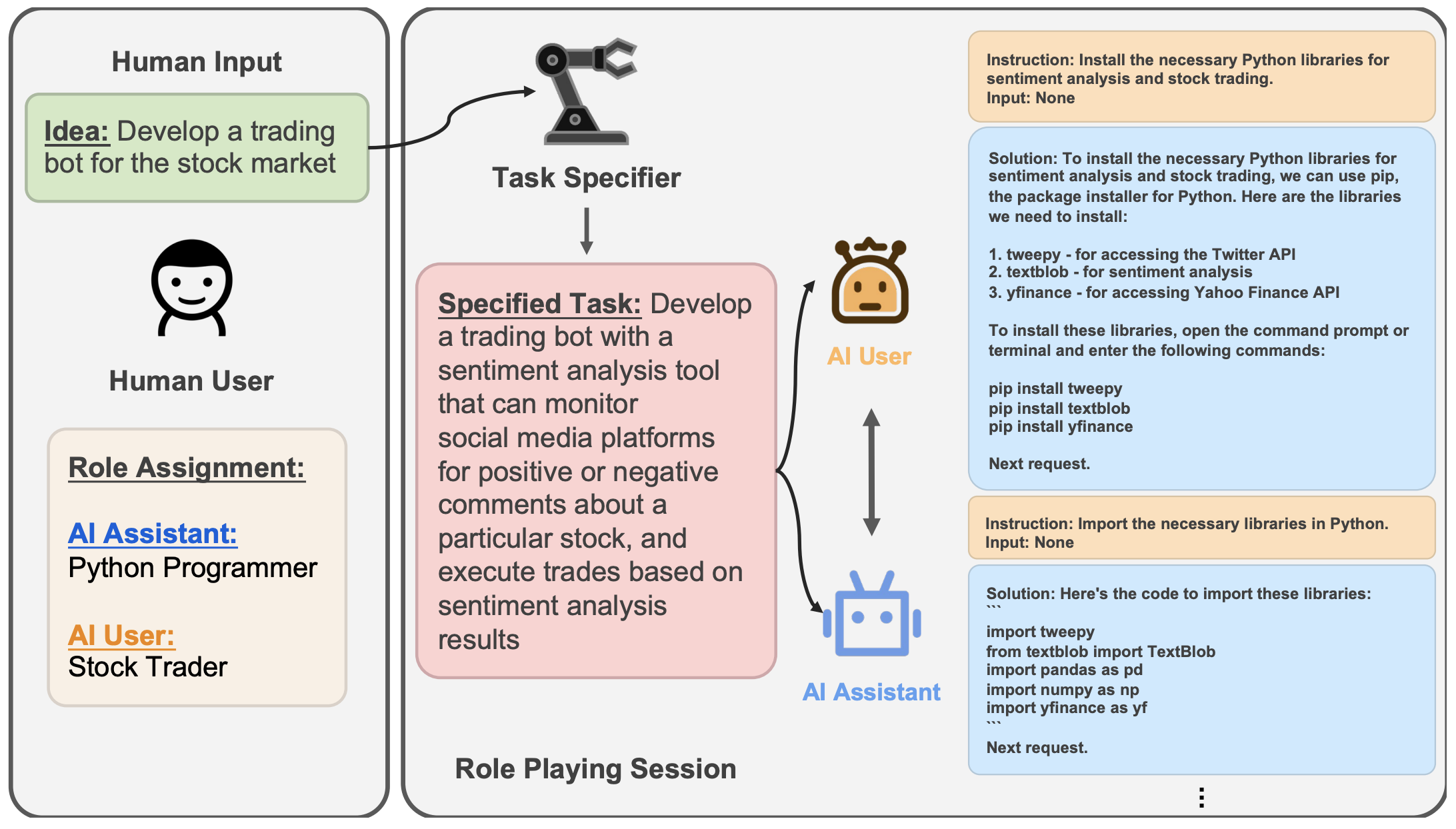

Camel

Paper: CAMEL: Communicative Agents for “Mind” Exploration of Large Language Model Society

Code: https://github.com/camel-ai/camel

AutoGPT

Paper: Auto-GPT for Online Decision Making: Benchmarks and Additional Opinions

Code: https://github.com/Significant-Gravitas/Auto-GPT

Blog: AutoGPT architecture & breakdown

Tutorials: AutoGPT Forge

AgentGPT

Code: https://github.com/reworkd/AgentGPT

BabyAGI

Blog: Task-driven Autonomous Agent Utilizing GPT-4, Pinecone, and LangChain for Diverse Applications

Colab:

Godmode

Voyager

Paper: Voyager: An Open-Ended Embodied Agent with Large Language Models

Code: https://github.com/MineDojo/Voyager

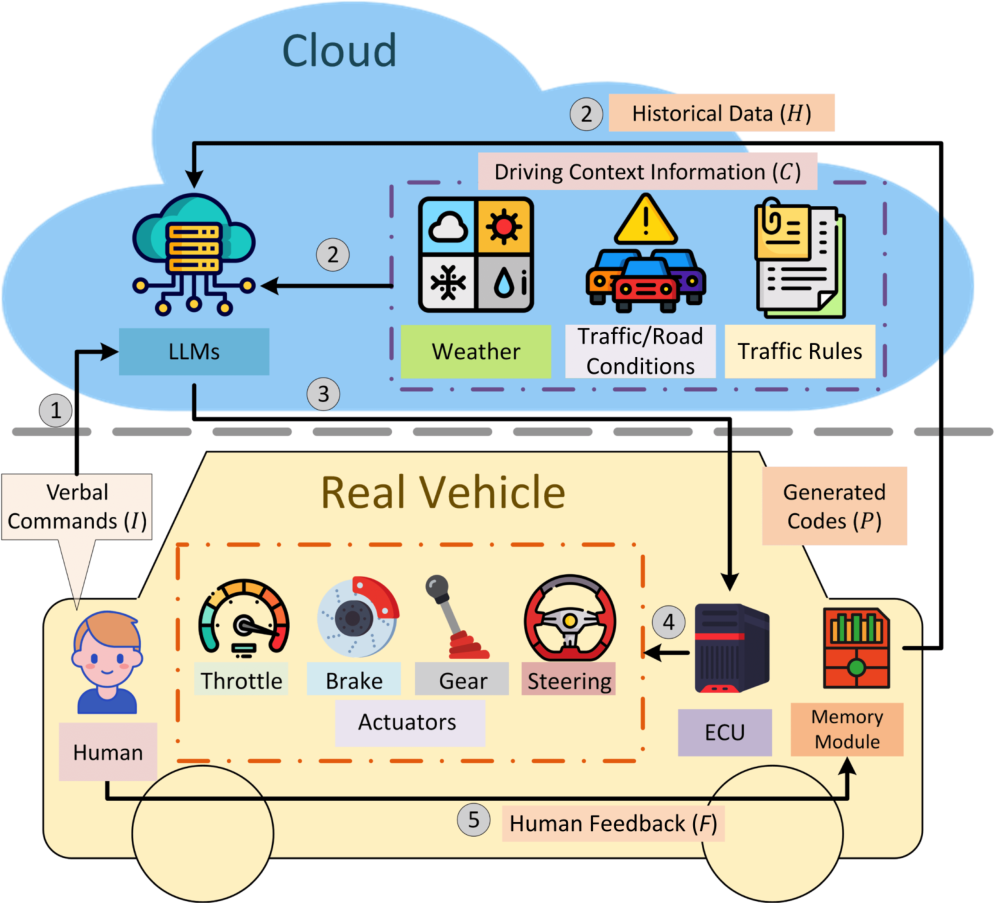

Talk2Drive

Paper: Large Language Models for Autonomous Driving: Real-World Experiments

MemGPT

Paper: MemGPT: Towards LLMs as Operating Systems

Code: https://github.com/cpacker/MemGPT

RL-GPT

Paper: RL-GPT: Integrating Reinforcement Learning and Code-as-policy

MC-Planner

Paper: https://arxiv.org/abs/2302.01560

Code: https://github.com/CraftJarvis/MC-Planner

Multi-Agent

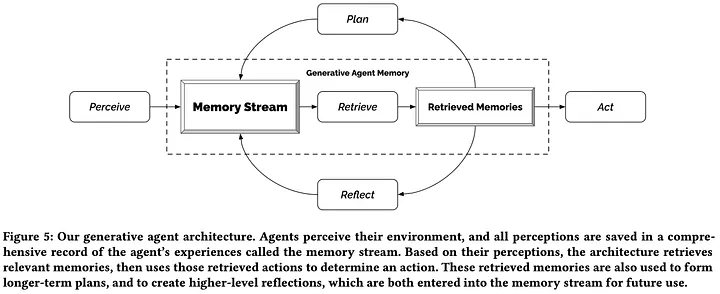

Generative Agents

Paper: Generative Agents: Interactive Simulacra of Human Behavior

Code: https://github.com/joonspk-research/generative_agents

Demo

Blog: Paper Review: Generative Agents: Interactive Simulacra of Human Behavior

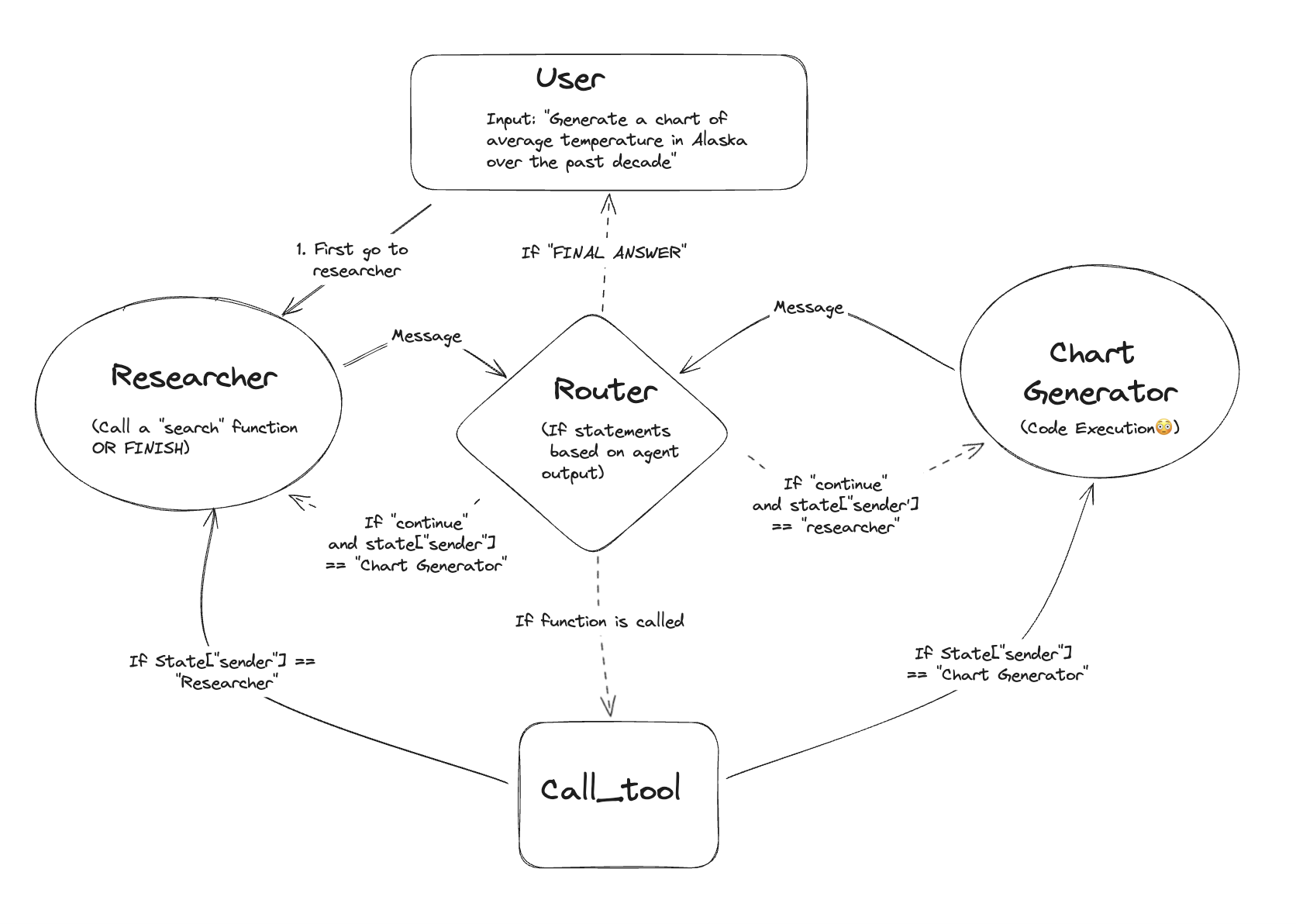

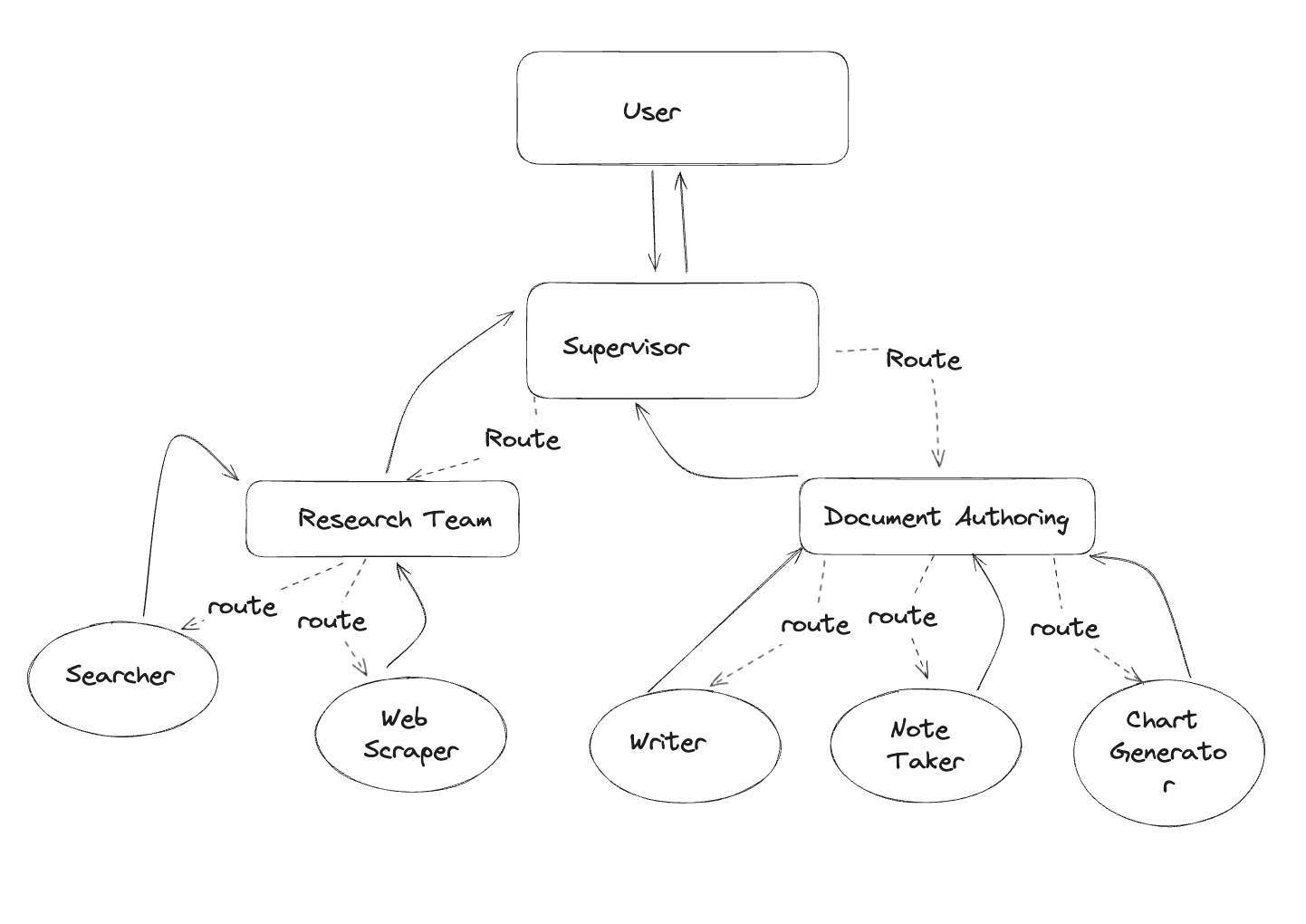

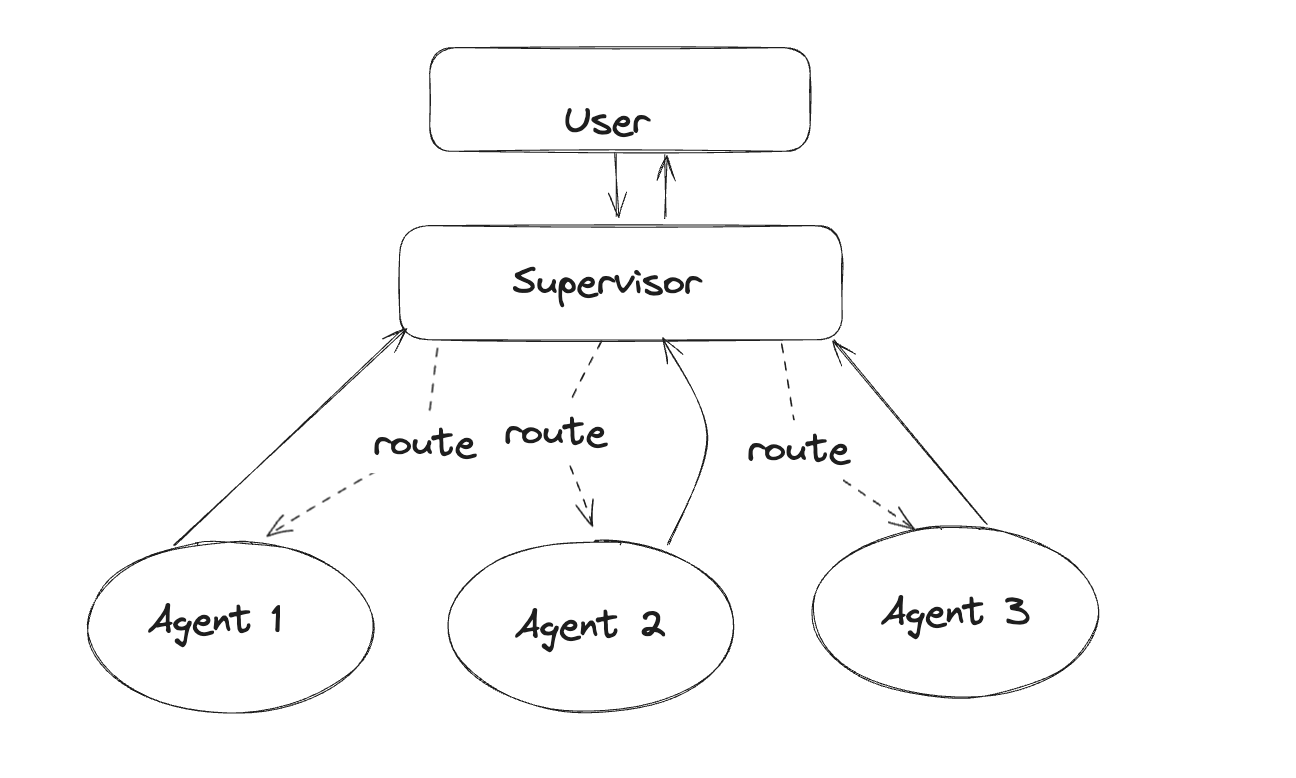

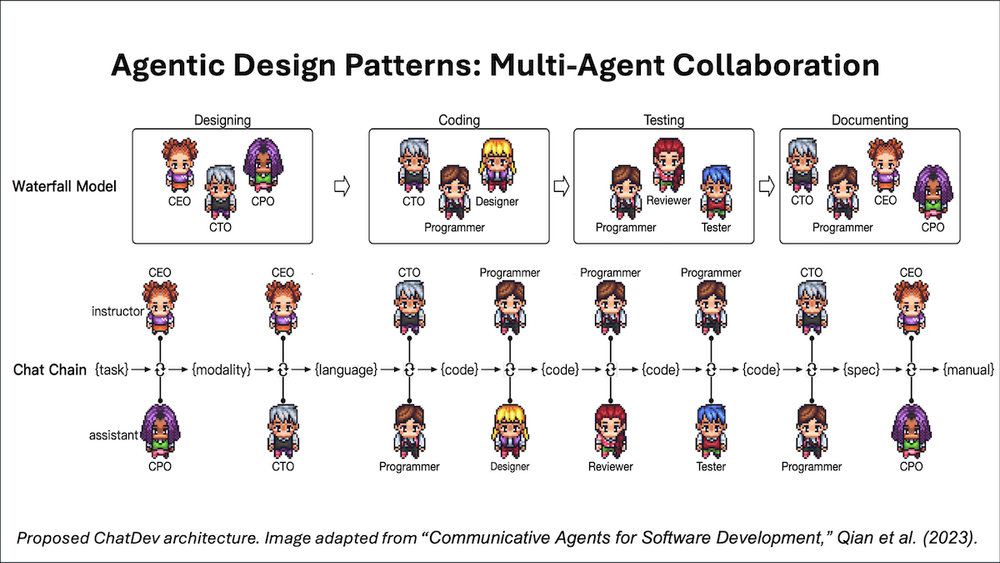

Multi-Agent Collaboration

- “Communicative Agents for Software Development,” Qian et al. (2023) (the ChatDev paper)

- “AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation,” Wu et al. (2023)

- “MetaGPT: Meta Programming for a Multi-Agent Collaborative Framework,” Hong et al. (2023)

Multi-Agent examples

LangGraph + Llama3 + Groq

Colab: https://drp.li/X3hpZ

Frameworks

LangChain

LangGraph

SWE

OpenDevin

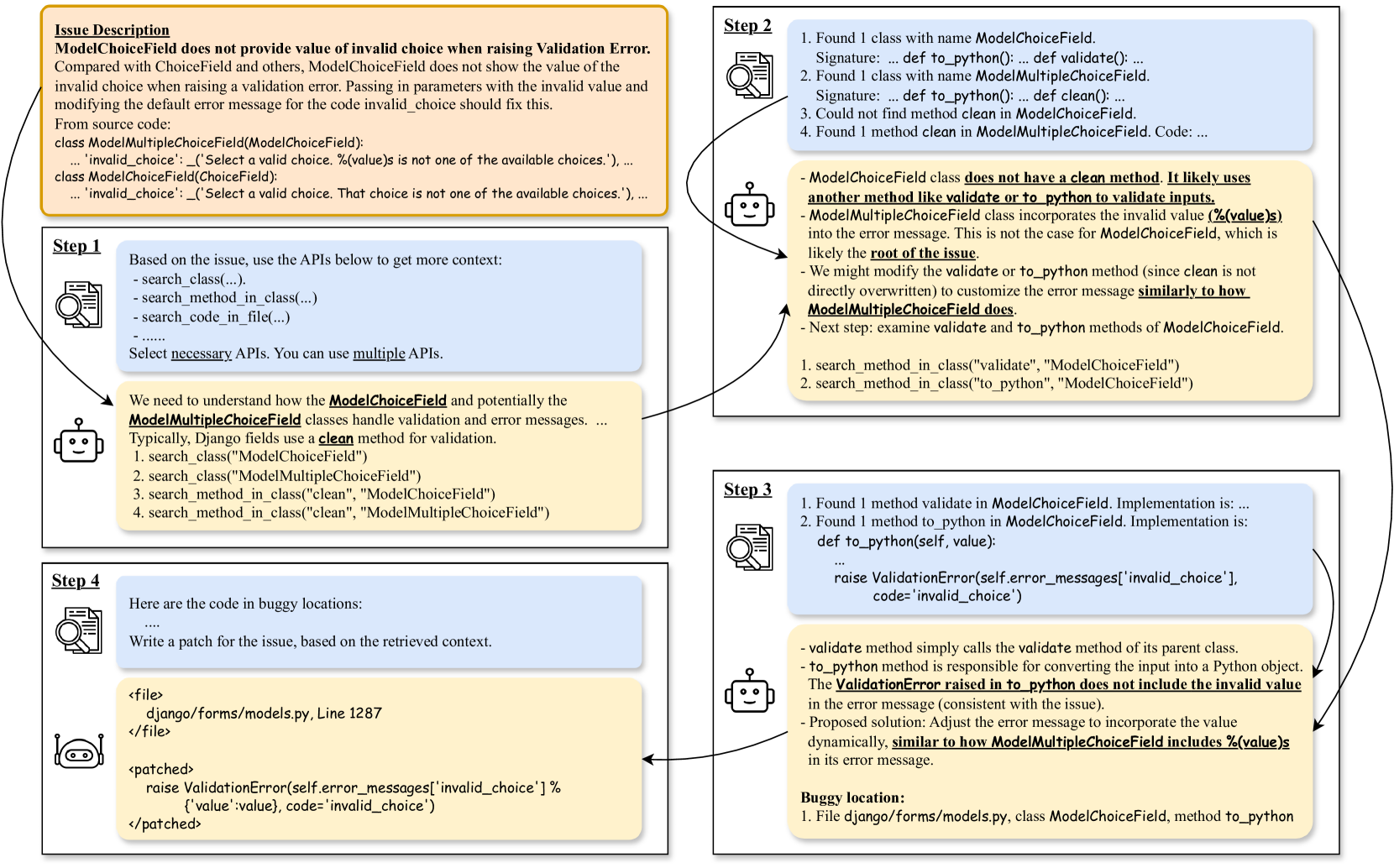

Paper: SWE-agent: Agent-Computer Interfaces Enable Automated Software Engineering

Code: https://github.com/OpenDevin/OpenDevin

Docs: OpenDevin Intro

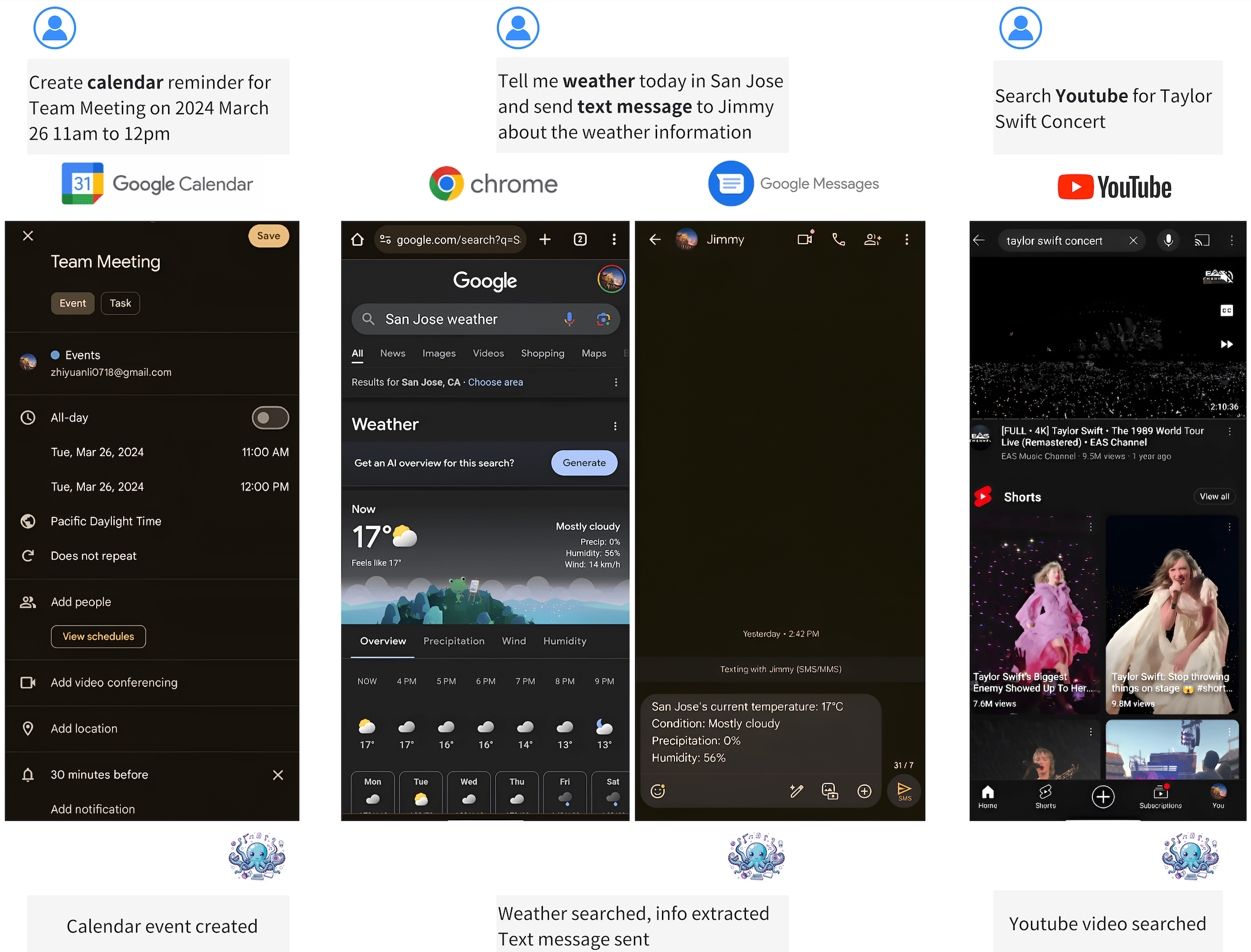

DroidAgent

Paper: Autonomous Large Language Model Agents Enabling Intent-Driven Mobile GUI Testing

Code: DroidAgent: Intent-Driven Android GUI Testing with LLM Agents

WebVoyager

Paper: WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models

Code: https://github.com/MinorJerry/WebVoyager

AutoCodeRover

Paper: AutoCodeRover: Autonomous Program Improvement

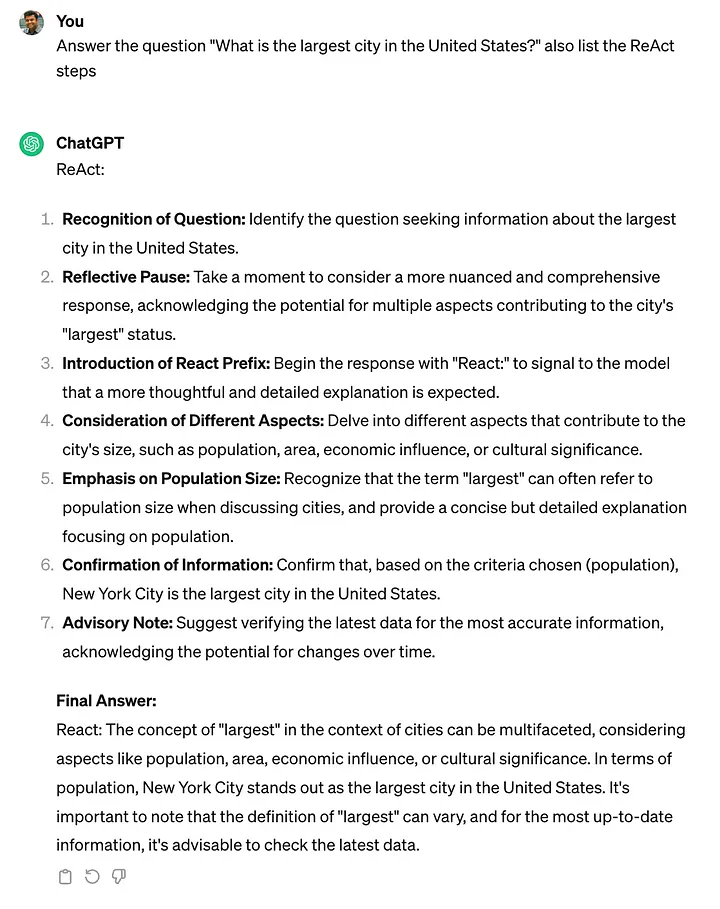

ReAct

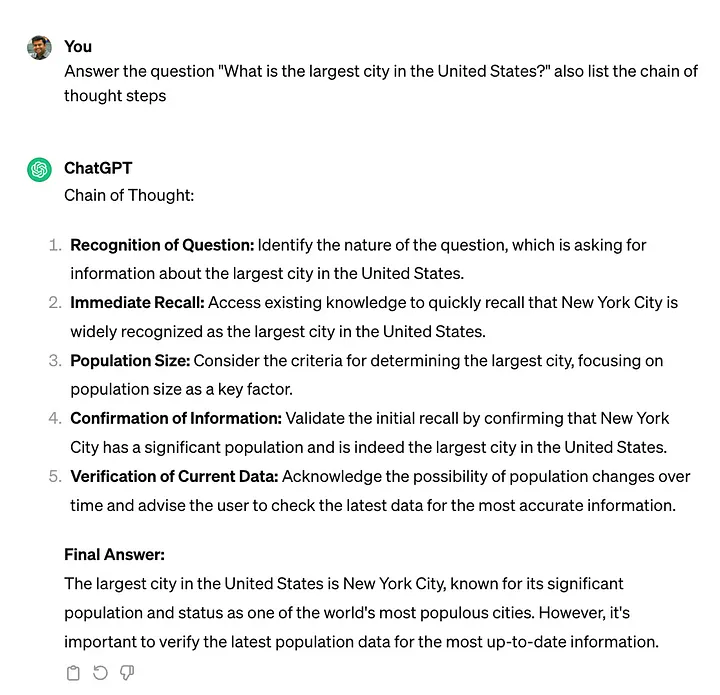

Chain of thought and ReAct

- Thought: The reasoning step, or thought, guides the foundation model by demonstrating how to approach a problem. It involves formulating a sequence of questions that lead the model to the desired solution.

- Action: Once the thought is established, the next step is to define an action for the foundation model to take. This action typically involves invoking an API from a predefined set, which lets the model interact with external resources.

- Observation: Following the action, the model observes and analyzes the results. These observations become input for further reasoning and decision-making.
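The Thought, Action, Observation cycle can be sketched as a small loop. This is a minimal illustration, not the LangChain or LlamaIndex implementation: `scripted_model` is a hypothetical stand-in for a foundation model, `calculator` is a hypothetical tool, and the `Action: tool[input]` text format is an assumption chosen for the example.

```python
import re
from typing import Callable, Dict


def calculator(expression: str) -> str:
    """Hypothetical tool: evaluate a simple arithmetic expression."""
    if not re.fullmatch(r"[0-9+\-*/(). ]+", expression):
        return "error: unsupported expression"
    try:
        return str(eval(expression, {"__builtins__": {}}, {}))
    except (SyntaxError, ZeroDivisionError):
        return "error: could not evaluate"


# The predefined set of actions the model is allowed to invoke.
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def scripted_model(transcript: str) -> str:
    """Stand-in for a foundation model (assumption, no real LLM call).

    It asks for the calculator first, then answers once an
    observation is present in the transcript.
    """
    if "Observation:" not in transcript:
        return "Thought: I need to multiply the two numbers.\nAction: calculator[12 * 7]"
    observation = transcript.rsplit("Observation:", 1)[1].strip().splitlines()[0]
    return f"Thought: The tool gave me the product.\nFinal Answer: {observation}"


def react(question: str, model: Callable[[str], str] = scripted_model, max_steps: int = 5) -> str:
    """Run the Thought -> Action -> Observation loop until an answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = model(transcript)          # Thought (and maybe an Action)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            return "error: model produced neither an action nor an answer"
        tool_name, tool_input = match.groups()
        tool = TOOLS.get(tool_name)
        # Action: call the tool; Observation: feed its result back to the model.
        observation = tool(tool_input) if tool else f"error: unknown tool {tool_name}"
        transcript += f"Observation: {observation}\n"
    return "error: step limit reached"


print(react("What is 12 times 7?"))
```

Swapping `scripted_model` for a function that calls a real model leaves the loop unchanged. The step limit is there so a model that never emits a final answer cannot loop forever.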

List chain-of-thought steps:

List ReAct steps:

LangChain ReAct

LlamaIndex ReAct

ReAct Agent - A Simple Intro with Calculator Tools

ReAct Agent with Query Engine (RAG) Tools

Controlling Agent Reasoning Loop with Return Direct Tools

Fine-tuning a gpt-3.5 ReAct Agent on Better Chain of Thought

Custom Cohere Reranker

Octopus

Paper: Octopus: Embodied Vision-Language Programmer from Environmental Feedback

Code: https://github.com/dongyh20/Octopus

Octopus v2

Paper: Octopus v2: On-device language model for super agent

model: NexaAIDev/Octopus-v2

kaggle: https://www.kaggle.com/code/rkuo2000/octopus-v2

Octopus v3

Paper: Octopus v3: Technical Report for On-device Sub-billion Multimodal AI Agent

Octopus v4

Paper: Octopus v4: Graph of language models

model: NexaAIDev/octo-net

Code: https://github.com/NexaAI/octopus-v4

ADAS

Paper: Automated Design of Agentic Systems

Code: https://github.com/ShengranHu/ADAS

Gödel Agent

Paper: Gödel Agent: A Self-Referential Agent Framework for Recursive Self-Improvement

Code: https://github.com/semanser/codel

With the development of agentic systems, language models have made substantial progress in areas such as reasoning and task planning. These agentic systems fall into two broad categories: Hand-Designed Agents, which fix the entire workflow and its modules, and Meta-Learning Optimized Agents, which allow a more flexible workflow and let the agent choose tools as appropriate. Both, however, are designed from human prior experience, so they are limited by that experience and the system as a whole loses the chance to be fully optimized.

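The research team behind this paper draws on the concept of the Gödel machine to let the agent decide its own workflow, select its own tool modules, and improve the whole system based on environmental feedback. The team calls this the Gödel Agent.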

In this conceptual paper, the team argues that to achieve self-optimization, a Gödel Agent needs at least four capabilities:

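- Self-Awareness

The agent can read the current values of the variables, functions, classes, and other parameters of both the environment and the agent itself while the workflow runs, and so obtain the overall operating state.

- Self-Improvement

Using its reasoning and planning abilities, the agent can recognize which parts of the workflow should be adjusted in the current situation, and then modify the code to change the working logic.

- Environmental Interaction

From changes in the environment's state, the agent gets feedback on the result of its latest modification, learns whether the current strategy is succeeding, and assesses whether further adjustment is needed.

- Recursive Improvement

Using the first three capabilities, the agent iterates continually. After several iterations this produces an effect similar to a Gödel machine, moving the whole system toward optimization.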
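The four capabilities can be illustrated with a toy loop. This is a sketch under heavy assumptions, not the paper's implementation: the agent's "code" is a one-line policy stored as a source string, `propose_rewrite` is a hypothetical stand-in for the language model that would rewrite that code, and `environment_feedback` is a made-up environment that rewards policies close to `3 * x`.

```python
from typing import Callable, Dict

# The agent's own logic, kept as source text so it can be read and rewritten.
INITIAL_POLICY = "def policy(x):\n    return 0 * x\n"


def load_policy(source: str) -> Callable[[float], float]:
    """Turn policy source text into a callable function."""
    namespace: Dict[str, object] = {}
    exec(source, namespace)
    return namespace["policy"]


def environment_feedback(policy: Callable[[float], float]) -> float:
    """Hypothetical environment: score is higher when policy(x) is near 3 * x."""
    inputs = [1.0, 2.0, 3.0]
    return -sum(abs(policy(x) - 3 * x) for x in inputs)


def propose_rewrite(source: str, step: int) -> str:
    """Stand-in for an LLM proposing new code (assumption).

    It ignores the current source and simply tries coefficient `step`.
    """
    return f"def policy(x):\n    return {step} * x\n"


def self_improve(iterations: int = 5) -> Dict[str, object]:
    state = {
        "source": INITIAL_POLICY,
        "score": environment_feedback(load_policy(INITIAL_POLICY)),
    }
    for step in range(1, iterations + 1):                      # recursive improvement
        current_source = state["source"]                       # self-awareness
        candidate = propose_rewrite(current_source, step)      # self-improvement
        score = environment_feedback(load_policy(candidate))   # environmental interaction
        if score > state["score"]:
            # Keep a rewrite only when the environment says it is better.
            state = {"source": candidate, "score": score}
    return state


result = self_improve()
print(result["source"])
print(result["score"])
```

Only the accept-if-better rule keeps the loop from drifting. A real system would also need safeguards around executing self-generated code, which this sketch omits.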

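The team tested the Gödel Agent on several types of tasks and compared it with earlier methods such as CoT, Self-Refine, Role Assignment, and Meta Agent Search. The Gödel Agent came out ahead on all of them, but because the task types tested were fairly limited, it is not yet known whether it performs as well in other domains.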

The team also suggests directions for future research, such as whether language models can give rise to collective intelligence and whether the Gödel Agent actually reaches theoretical optimality.

OpenAI Swarm

llama3-groq

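```python
import openai
from google.colab import userdata  # Colab-only helper for reading stored secrets

# Groq tool-use model, reached through Groq's OpenAI-compatible endpoint
model = "llama3-groq-70b-8192-tool-use-preview"

llm_client = openai.OpenAI(
    base_url="https://api.groq.com/openai/v1",
    api_key=userdata.get('GROQ_API_KEY'),
)
```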

bare_minimum

```python
# https://github.com/openai/swarm/blob/main/examples/basic/bare_minimum.py
from swarm import Swarm, Agent

# Reuse the Groq-backed OpenAI client and model name defined above
swarm_client = Swarm(client=llm_client)

agent = Agent(
    name="Agent",
    instructions="You are a helpful agent.",
    model=model,
    tool_choice="auto"
)

messages = [{"role": "user", "content": "Hi!"}]
response = swarm_client.run(agent=agent, messages=messages)

# Print the assistant's final reply
print(response.messages[-1]["content"])
```

Swarm_Llama3-Groq.ipynb

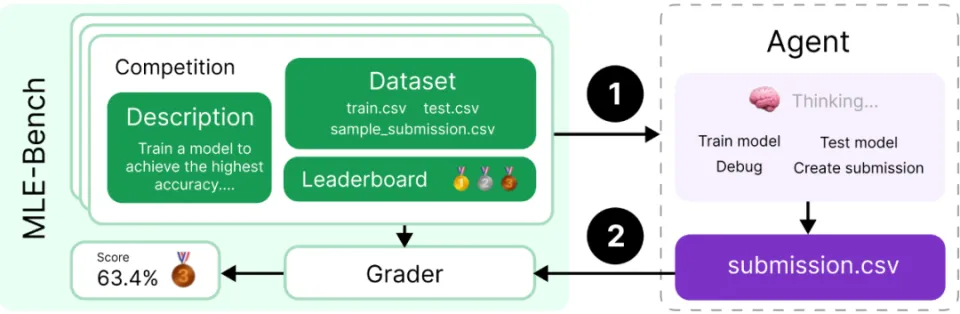

MLE-bench

Paper: MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering

Code: https://github.com/openai/mle-bench

AI Agent Analysis of Team Chinese Taipei's Premier12 Preliminary Round Results

AI Agent: Hands-On: Analyzing Team Chinese Taipei's Premier12 Preliminary Round Results with the Google Gemini Model and LangChain

smolagents

examples:

Agentic Workflow

Reasoning Language Models

Paper: Reasoning Language Models: A Blueprint

PRefLexOR

Paper: [PRefLexOR: Preference-based Recursive Language Modeling for Exploratory Optimization of Reasoning and Agentic Thinking](https://arxiv.org/pdf/2501.08120)

Model: https://huggingface.co/lamm-mit/Graph-Preflexor_01062025

Github: https://github.com/lamm-mit/PRefLexOR

This site was last updated September 17, 2025.